Grilled Stuft Burritos and Learning Curves

Remember the grilled stuft burrito at Taco Bell? Before it got XL’d? Or XXL’d? Thing of beauty. Just under a pound of sketchy beef, cheese, tortilla strips, and crunchy casing. It came with a lil order of nachos so insubstantial it was probably fraud to call it a side. But you could dip the burrito in the nacho cheese and gaze upon the face of God. Anyway, just thinking about that burrito today I guess.

Breunig M, Chelf C, Kashiwagi D. Point-of-Care Ultrasound Psychomotor Learning Curves: A Systematic Review of the Literature. J Ultrasound Med. Published online May 7, 2024. doi:10.1002/jum.16477

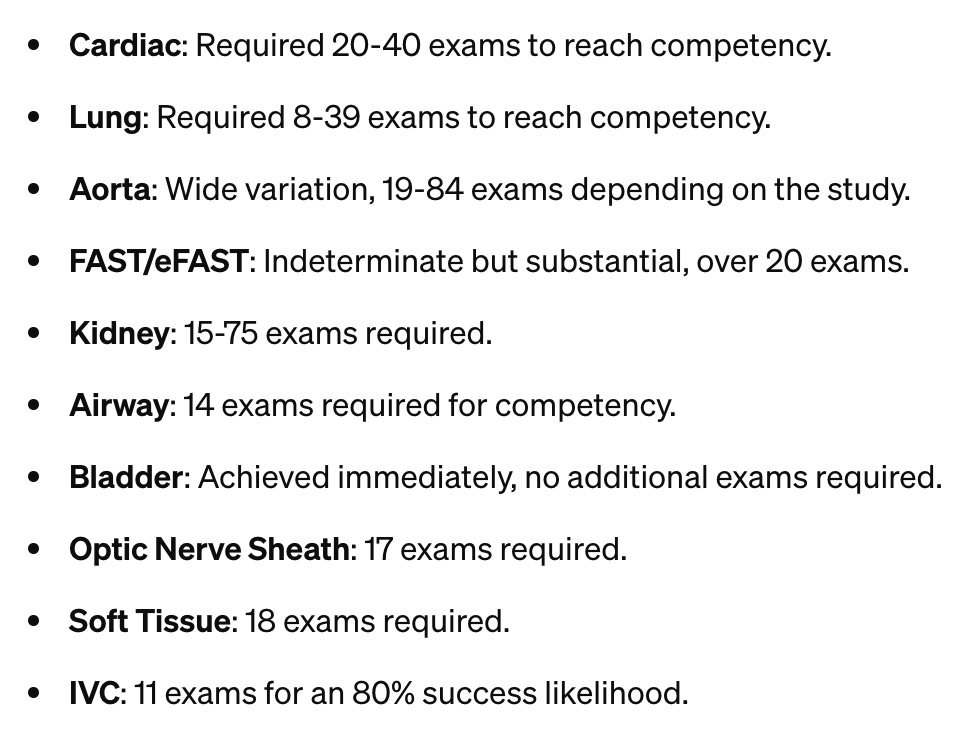

This is a review germane to what I do as a POCUS educator. How many scans is enough? Don’t learners progress at different rates? Do I need to test learners that hit a certain mark to ensure competence? How do you test them? Is it different for different organs? Studies in this review were really heterogeneous on all fronts - number of learners, type of learners, amounts of instruction, endpoints used, etc. - but at least we’re ballparking it, and the numbers aren’t so far from those suggested by ACEP and SHM/CHEST for some important scans like heart and lung. Table 1 in the article breaks down each paper and its specifics, but here’s what they found with regard to numbers for particular scans:

All of that feels about right. Like, none of those numbers are terribly surprising to me. The highly variable ones (aorta, kidney) probably had more to do with the specific methodologies of examined papers - I doubt very much it’s something inherent to those scans, which are both easier than the cardiac exams.

There are a lot of ways to assess competence, and probably not one best way to do it except the one that makes the most sense for you and your learners. There were quite a few studies that measured to ‘plateau’, or the point when learners weren’t really getting any better with additional scans. As the authors point out, this doesn’t necessarily endorse competence, but the study was more about identifying learning curves. At the plateau point you’d need to assess whether the learner’s doing passable work or whether additional teaching/proctored scanning is required. A host of other studies used competence-defining thresholds for performance, which represents a better endpoint, I think. But then you’re setting the standard for competence, too, and that’s generally a matter of expert opinion.

So what’s my takeaway? I miss the old grilled stuft burrito, and 20 is a pretty good number for heart/FAST/kidney, while 10 might do it for lung, IVC, and skin/soft tissue. And you can cut learners loose on bladders pronto. The numbers, I think, can work more as check-in points - when someone hits 20 cardiac scans they might be ready to test for competence. I think some of the certifications in the UK work that way, where it’s not necessarily the number of scans itself that certifies but reaching that threshold as a minimum requirement to register for testing.

I leave you with this unrelated exchange between Jimmy Pesto Jr. and Calvin Fischoeder (Bob’s Burgers):

Jimmy Jr.: It smells weird in here.

Fischoeder: It smells weird everywhere, sir. That’s how you know you’re alive.